In the data platform space, there's a quiet trend I've been noticing more and more.

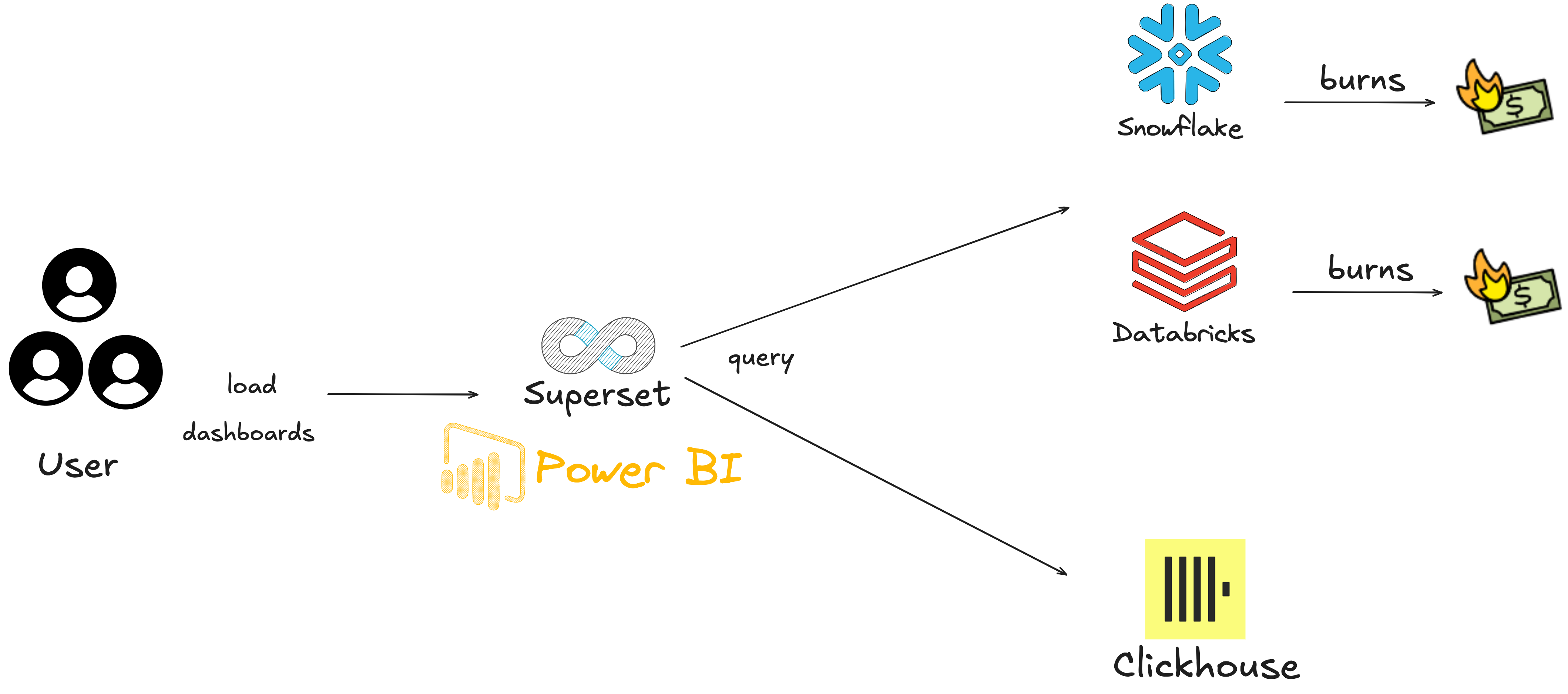

Despite the wide range of tools available today, many teams still rely on heavyweight, fully managed warehouses like Snowflake, BigQuery, or Databricks to power frequent analytical queries. To be fair, these platforms are robust, scalable, and packed with features. But if we're being honest, most teams use only a small fraction of what's available.

What often gets overlooked: these platforms come at a price—literally and figuratively.

When "overengineering" becomes the default

Let's take a typical scenario I've seen repeatedly:

- You've got a massive flat table, maybe billions of rows.

- It's hit by dashboards and internal tools dozens of times a day.

- Most queries are aggregations—same metrics, same filters.

In these setups, the same data gets scanned over and over again. And because these platforms bill for the compute each query consumes, you pay for that scan every single time.

Real talk: I've worked with teams that were spending thousands per month just to power dashboards refreshing every few minutes. The irony? These dashboards weren't even real-time.
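To make the arithmetic concrete, here is a rough back-of-the-envelope sketch. Every number in it is hypothetical, and it assumes a per-terabyte-scanned pricing model, which is only one of several billing models (credit-based or time-based billing would need a different formula). The function name is mine, not part of any tool.

```python
def monthly_scan_cost(
    refresh_interval_min: float,
    gb_scanned_per_refresh: float,
    price_per_tb_scanned: float,
    days: int = 30,
) -> float:
    """Estimate the monthly cost of a dashboard that rescans the same data on every refresh."""
    refreshes_per_day = 24 * 60 / refresh_interval_min
    # Decimal terabytes (1 TB = 1000 GB) keep the example simple.
    tb_scanned = refreshes_per_day * days * gb_scanned_per_refresh / 1000
    return tb_scanned * price_per_tb_scanned


# Hypothetical inputs: refresh every 5 minutes, 40 GB scanned each time, $5 per TB scanned.
cost = monthly_scan_cost(refresh_interval_min=5, gb_scanned_per_refresh=40, price_per_tb_scanned=5.0)
print(f"${cost:,.0f} per month")
```

With those made-up inputs, one dashboard triggers 288 refreshes a day and scans about 345.6 TB a month, which is how a single "not even real-time" dashboard ends up in the thousands.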

ClickHouse as a different kind of engine

That's where ClickHouse enters the picture. It's not a replacement for every data warehouse, but in the right context, it's a game-changer.

ClickHouse is a columnar OLAP database: queries read only the columns they touch, which keeps both storage and scans efficient. But what stands out most for me is how much control you get:

- MergeTree engines: define exactly how your data is sorted and partitioned.

- LowCardinality types: compression and performance benefits for dimensions.

- Native compression: ZSTD, LZ4, and others, without bloated storage bills.
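Here is a minimal sketch of what those three knobs can look like together in one table definition. The table and column names are hypothetical, and the sort key and partitioning are just one plausible choice for a date-filtered events workload, not a recommendation. I keep the SQL in a Python string so it can be handed to whichever ClickHouse client you already use.

```python
# Hypothetical schema: the table name, columns, and key choices are illustrative only.
CREATE_EVENTS_TABLE = """
CREATE TABLE events
(
    event_date  Date,
    event_time  DateTime,
    event_type  LowCardinality(String),   -- dictionary-encoded dimension
    country     LowCardinality(String),   -- another low-cardinality dimension
    user_id     UInt64,
    payload     String CODEC(ZSTD(3))     -- per-column compression codec
)
ENGINE = MergeTree
PARTITION BY toYYYYMM(event_date)         -- one partition per month
ORDER BY (event_type, country, event_date)
"""

print(CREATE_EVENTS_TABLE.strip())
```

The ORDER BY clause is the part that deserves the most thought: it decides how rows are laid out on disk, so it should follow the filters your queries use most.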

Key takeaway: If you're running repetitive queries on structured data, ClickHouse can often beat managed options on both speed and cost.

Case study: Cutting costs with projections

In one project, a client had a 2.5-billion-row events table in Snowflake. The most common queries filtered by date and grouped by three fields. That’s it. Same logic, every time.

We moved that workload to ClickHouse and created a projection on the common query pattern.

- That one projection covered ~60% of total queries. No app changes, no dashboard rework—ClickHouse auto-matched the queries to the optimized path.

- Latency dropped from 5–7 seconds to under 150ms.

- The bill? Roughly 10% of what it used to be.

And just to clarify: projections are not separate pre-aggregated materialized views you have to manage. They're defined on the table itself, stored inside its data parts, kept in sync on every insert, and ClickHouse decides at query time when to use them.
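For illustration, a projection for the "filter by date, group by three fields" pattern could be defined roughly like this. This is a sketch, not the client's actual schema: the table, projection, and column names are hypothetical, and the helper function is mine. The two statements it builds, ALTER TABLE ... ADD PROJECTION and ALTER TABLE ... MATERIALIZE PROJECTION, are standard ClickHouse syntax; the second one backfills the projection for data that was already in the table.

```python
def projection_statements(
    table: str,
    name: str,
    group_by: list[str],
    metric: str = "count()",
) -> list[str]:
    """Build the statements that add an aggregating projection and backfill it."""
    cols = ", ".join(group_by)
    add = (
        f"ALTER TABLE {table} ADD PROJECTION {name} "
        f"(SELECT {cols}, {metric} GROUP BY {cols})"
    )
    # New inserts maintain the projection automatically; existing parts need a backfill.
    materialize = f"ALTER TABLE {table} MATERIALIZE PROJECTION {name}"
    return [add, materialize]


# Hypothetical names: the date column plus three grouping fields.
for statement in projection_statements(
    "events", "daily_rollup", ["event_date", "event_type", "country", "platform"]
):
    print(statement + ";")
```

The dashboards keep sending their original GROUP BY queries against the base table; nothing on the application side needs to know the projection exists.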

Real-time analytics without overcomplication

ClickHouse also handles real-time surprisingly well.

Another team I worked with had a setup where Parquet files were dropped into S3 every few seconds. They wanted fast feedback: “What’s the latest count per event type in the last 10 minutes?”

We used ClickHouse's S3 engine to ingest the data directly, added a Materialized View to pre-process incoming events, and set up an S3 notification queue to trigger lightweight inserts.

No Flink. No Spark Streaming. No Kafka. Just ClickHouse and S3—and sub-second latency on fresh data.
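A stripped-down sketch of that pipeline might look like the following. All names here (events_raw, events_per_minute, the bucket URL) are placeholders I made up, and I use the s3 table function with a per-file INSERT as one way to do the "lightweight insert"; the real setup may have been wired differently. How the S3 notification gets consumed is deliberately left out.

```python
# Hypothetical names throughout: events_raw, events_per_minute, and the URL are placeholders.

CREATE_ROLLUP_TABLE = """
CREATE TABLE events_per_minute
(
    minute      DateTime,
    event_type  LowCardinality(String),
    events      UInt64
)
ENGINE = SummingMergeTree
ORDER BY (minute, event_type)
"""

# Fires on every insert into events_raw and writes per-minute counts to the rollup table.
CREATE_ROLLUP_VIEW = """
CREATE MATERIALIZED VIEW events_per_minute_mv TO events_per_minute AS
SELECT
    toStartOfMinute(event_time) AS minute,
    event_type,
    count() AS events
FROM events_raw
GROUP BY minute, event_type
"""

# "What's the latest count per event type in the last 10 minutes?"
LAST_10_MINUTES = """
SELECT event_type, sum(events) AS total
FROM events_per_minute
WHERE minute >= now() - INTERVAL 10 MINUTE
GROUP BY event_type
"""


def insert_from_s3_sql(object_url: str, target: str = "events_raw") -> str:
    """Build the INSERT that loads one newly arrived Parquet file from S3."""
    if "'" in object_url:
        raise ValueError("unexpected quote in object URL")
    return f"INSERT INTO {target} SELECT * FROM s3('{object_url}', 'Parquet')"


# One statement per notification, e.g. for a file that just landed:
print(insert_from_s3_sql("https://example-bucket.s3.amazonaws.com/events/part-0001.parquet"))
```

Because the materialized view does its work at insert time, the "last 10 minutes" query only touches a handful of pre-summed rows instead of the raw events.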

Some side notes that help a lot

Even outside of ClickHouse-specific features, there are patterns worth mentioning:

- Query caching: if you have a layer above ClickHouse, caching repeated queries helps a lot.

- Rewriting queries to leverage projections and indexes: often it only takes small changes.

- Monitoring query logs: ClickHouse records every query, along with its duration and the data it read, in the system.query_log table, which is great for spotting slow queries and inefficiencies early.
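Two of these patterns are easy to sketch. The first is a tiny time-based cache that could sit in a layer above ClickHouse; the class and its parameters are hypothetical, and `run_query` stands in for whatever client call you actually use. The second builds a query against system.query_log to surface repeated queries that are slow on average; the threshold and the one-day window are arbitrary choices.

```python
import time
from typing import Any, Callable


class TTLQueryCache:
    """Cache query results by SQL text for a fixed number of seconds."""

    def __init__(
        self,
        run_query: Callable[[str], Any],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._run_query = run_query
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[str, tuple[float, Any]] = {}

    def get(self, sql: str) -> Any:
        now = self._clock()
        hit = self._entries.get(sql)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]  # still fresh: skip the database entirely
        result = self._run_query(sql)
        self._entries[sql] = (now, result)
        return result


def slow_queries_sql(min_avg_ms: int, limit: int = 20) -> str:
    """Build a query that lists query shapes whose average duration exceeds a threshold."""
    return f"""
SELECT
    normalized_query_hash,
    any(query) AS sample_query,
    count() AS runs,
    avg(query_duration_ms) AS avg_ms,
    sum(read_bytes) AS total_read_bytes
FROM system.query_log
WHERE type = 'QueryFinish'
  AND event_time >= now() - INTERVAL 1 DAY
GROUP BY normalized_query_hash
HAVING avg_ms > {int(min_avg_ms)}
ORDER BY total_read_bytes DESC
LIMIT {int(limit)}
"""
```

Grouping by normalized_query_hash lumps together queries that differ only in their literal values, which is usually what you want when hunting for the dashboard query that runs a thousand times a day.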

And if vendor lock-in is a concern (as it should be), ClickHouse gives you options:

- You can host it yourself, or go with one of the hosted vendors (ClickHouse Cloud, Altinity, etc.).

- Backups are simple: the built-in BACKUP and RESTORE commands cover both full and incremental backups.

- You own the schema. You own the files. No strings attached.
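As a rough sketch of the backup point, here is how a full backup and an incremental one on top of it could be expressed with ClickHouse's BACKUP command. The helper function, disk name, and file names are hypothetical, and this assumes a disk called 'backups' has already been configured and allowed as a backup destination on the server.

```python
def backup_statements(
    table: str,
    disk: str,
    full_name: str,
    incremental_name: str,
) -> tuple[str, str]:
    """Build a full BACKUP statement and an incremental one based on it."""
    full = f"BACKUP TABLE {table} TO Disk('{disk}', '{full_name}')"
    # base_backup tells ClickHouse to store only what changed since the full backup.
    incremental = (
        f"BACKUP TABLE {table} TO Disk('{disk}', '{incremental_name}') "
        f"SETTINGS base_backup = Disk('{disk}', '{full_name}')"
    )
    return full, incremental


# Hypothetical table, disk, and archive names.
full, incremental = backup_statements("default.events", "backups", "events_full.zip", "events_incr_1.zip")
print(full + ";")
print(incremental + ";")
```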

Final thoughts

ClickHouse isn’t trying to be everything. It’s not a magic box that solves all your problems.

But if you have:

- Flat, wide datasets

- Repetitive or real-time queries

- An interest in cutting costs without cutting corners

... then ClickHouse is seriously worth a look.

Sometimes, going back to basics—with a powerful engine you can control and optimize—gives you the kind of performance that cloud tools promise, but don't always deliver.